Stephen Roddy

“An experimental composer from Ireland, Stephen Roddy proves equally adept at crushing soundscapes as he does mysterious melodies.”

- Bandcamp New & Notable, March 2022.

Embodied Sonification

Project Overview

Sonification is the representation of data with sound, and Auditory Display is the use of audio to present information to a listener. In certain contexts and for certain types of data, sound can be an effective means of representation and communication. This project involved the development of Sonification and Auditory Display frameworks based on human-centered design principles derived from embodied cognition, an area of cognitive science that is critical to our understanding of meaning-making in cognition. The research and development portions of the project were carried out in fulfillment of a PhD degree at the Department of Electronic and Electrical Engineering in Trinity College Dublin under the supervision of Dr. Dermot Furlong. Throughout the project, music composition and sound design practices informed by the embodied cognition literature were employed as exploratory research methods. This helped to identify exciting new possibilities for mapping data to sound. These possibilities were then empirically tested to confirm their efficacy for sonification tasks. A number of data-driven musical works were created during the research process.

Coding & Technology

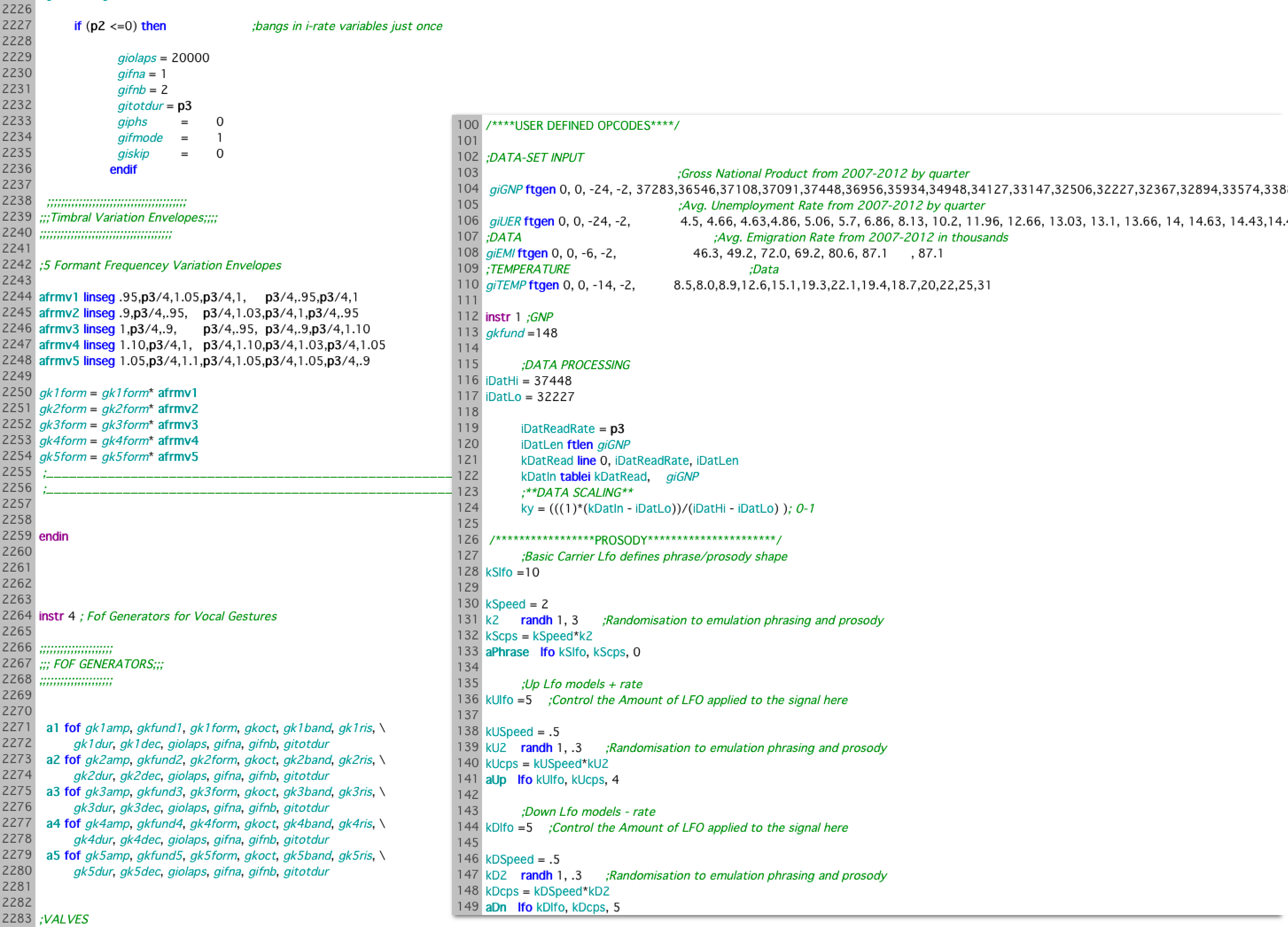

The majority of the project was coded in Csound, a programming language for audio written in C. Many of the stimuli used in experiments and evaluations were also produced in Csound. Additional sounds and stimuli were produced in Native Instruments Reaktor 5, and production work was carried out in the Logic Pro X DAW. Rapid prototyping platforms were developed in Csound and used to prototype new ideas in an efficient manner. The two prototyping platforms below were developed using fof (fonction d’onde formantique) synthesis methods and generate simulated vocal gestures.
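For readers unfamiliar with fof synthesis, the core idea can be sketched in a few lines of Python. This is a minimal illustration and not the Csound code used in the project: the function names `fof_grain` and `fof_tone`, the 3 ms attack, the 20 ms grain length and every parameter value are assumptions chosen for the example. Each grain is a sinusoid at the formant frequency with a smooth attack and an exponential decay, and one grain is triggered per period of the fundamental.

```python
import math

def fof_grain(formant_hz, bandwidth_hz, attack_s, duration_s, sample_rate=44100):
    """Return one FOF grain as a list of samples (illustrative helper)."""
    alpha = math.pi * bandwidth_hz   # decay rate: a wider bandwidth decays faster
    beta = math.pi / attack_s        # half a cosine cycle spans the attack time
    n = int(round(duration_s * sample_rate))
    grain = []
    for i in range(n):
        t = i / sample_rate
        env = math.exp(-alpha * t)
        if t < attack_s:
            # Raised-cosine attack so the grain starts from silence.
            env *= 0.5 * (1.0 - math.cos(beta * t))
        grain.append(env * math.sin(2.0 * math.pi * formant_hz * t))
    return grain

def fof_tone(fundamental_hz, formant_hz, bandwidth_hz, seconds, sample_rate=44100):
    """Overlap-add one grain per fundamental period (illustrative helper)."""
    out = [0.0] * int(round(seconds * sample_rate))
    # Assumed grain shape: 3 ms attack, 20 ms total length.
    grain = fof_grain(formant_hz, bandwidth_hz, 0.003, 0.02, sample_rate)
    period = sample_rate / fundamental_hz
    k = 0
    while int(k * period) < len(out):
        start = int(k * period)
        for i, sample in enumerate(grain):
            if start + i >= len(out):
                break
            out[start + i] += sample
        k += 1
    return out

# A 110 Hz voice-like tone with a single formant near 600 Hz.
tone = fof_tone(110.0, 600.0, 80.0, 0.1)
```

A vowel-like sound would normally sum several such formant streams with different centre frequencies, bandwidths and amplitudes. Csound's own fof opcode exposes more controls than this sketch does.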

Data to Sound

The project involved the development of new techniques and frameworks for mapping a range of datatypes to sound in order to represent changes in the original data set. Physical and spatial datatypes like temperature, distance, height, weight, speed, amount, texture, etc. were used in the project. Weather data was also used. As the project progressed, the focus fell increasingly on socio-economic data.

The first phase of the project dealt with the concept of ‘polarity’. It examined whether listeners interpret increases in a sonic dimension (e.g. pitch, tempo) as increases or as decreases in the data represented. I investigated how this operated for ‘numerical data’ (i.e. data that is a count of some specific thing like population, chemical concentration, RPM, etc.) and ‘attribute data’ (i.e. data that describes some attribute of a thing like size, depth, mass, etc.).

Pure Sine Tone Examples:

Increasing Frequency | Decreasing Frequency | Increasing Tempo | Decreasing Tempo
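The polarity question above can be made concrete with a small mapping function. The sketch below is illustrative only and is not the project's Csound implementation: the function name `map_to_frequency`, the 220-880 Hz range and the linear scaling are all assumptions. With positive polarity, larger data values produce higher frequencies; with negative polarity, the same values produce lower frequencies.

```python
def map_to_frequency(values, f_min=220.0, f_max=880.0, polarity="positive"):
    """Linearly map data values to frequencies in Hz (illustrative helper).

    polarity="positive": larger data values give higher frequencies.
    polarity="negative": larger data values give lower frequencies.
    """
    if polarity not in ("positive", "negative"):
        raise ValueError("polarity must be 'positive' or 'negative'")
    lo, hi = min(values), max(values)
    span = hi - lo
    freqs = []
    for v in values:
        # Normalise to the range 0..1; a constant series maps to the midpoint.
        x = (v - lo) / span if span else 0.5
        if polarity == "negative":
            x = 1.0 - x
        freqs.append(f_min + x * (f_max - f_min))
    return freqs

rising = map_to_frequency([0, 5, 10])                        # [220.0, 550.0, 880.0]
falling = map_to_frequency([0, 5, 10], polarity="negative")  # [880.0, 550.0, 220.0]
```

The same data series therefore yields two opposite sonic contours, and which one a listener reads as "more" is an empirical matter rather than something the mapping itself decides.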

The second phase explored the kinds of data that can be best represented with simulated vocal gestures. This phase made use of the vocal gesture prototyping platforms.

Effective Vocal Gesture Examples:

Texture | Amount | Speed | Size Spatial Extent | Tension | Anger

The third phase examined how soundscapes can be used to represent data. Initial soundscape elements were synthesised and mapped to data using prototyping platforms in Csound, but this approach was abandoned in favour of soundscape recordings that could be manipulated and mapped to data. This phase explored how the concepts of conceptual metaphor and blending can be applied to design better soundscape sonifications.

Soundscape Sonification:

Sonification of Irish GDP from 1979 to 2013 | Emigration Low | Emigration High | Poverty Low | Poverty High

The final phase explored how Doppler shifting can be used to add a sense of temporal context to a sonification:

Temporospatial Motion Framework:

Original Framework | Refined Framework
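As background for the Doppler shifting mentioned above, the sketch below computes the standard textbook Doppler shift for a sound source moving relative to a stationary listener. It does not reproduce the Temporospatial Motion Framework itself, and how that framework ties data time to motion is not specified here. The function name `doppler_frequency` and the 343 m/s speed of sound are assumptions made for the example.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees Celsius (assumed value)

def doppler_frequency(source_hz, radial_velocity, c=SPEED_OF_SOUND):
    """Frequency heard by a stationary listener from a moving source.

    radial_velocity > 0: the source moves towards the listener (pitch rises).
    radial_velocity < 0: the source moves away from the listener (pitch falls).
    """
    if abs(radial_velocity) >= c:
        raise ValueError("source speed must be below the speed of sound")
    return source_hz * c / (c - radial_velocity)

approaching = doppler_frequency(440.0, 20.0)   # higher than 440 Hz
receding = doppler_frequency(440.0, -20.0)     # lower than 440 Hz
```

Because listeners already associate a rising pitch with something approaching and a falling pitch with something moving away, a shift of this kind offers a familiar cue for whether a sound is arriving or departing.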

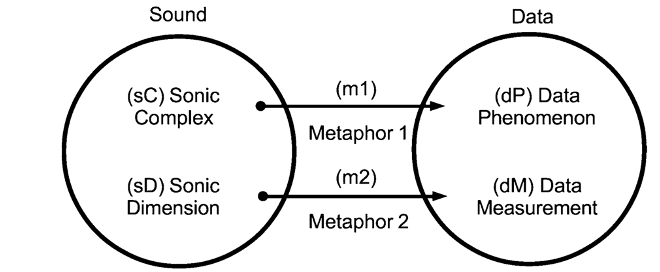

The Embodied Sonification Listening Model

The project proposes the Embodied Sonification Listening Model, or ESLM (presented above), as a means of describing how meaning emerges in sonification listening from an embodied perspective. The model is proposed early in the thesis alongside supporting empirical results from experimental investigations of its applicability. The implementations and evaluations in the remainder of the thesis are designed with this model in mind.

The ESLM asserts the following:

- Listeners attend to the sound as though it were the data during sonification listening.

- The sound is experienced as a metaphorical representation of the data.

- Two metaphors are involved in this process.

- In the first, the sound heard is identified with the original data source.

- In the second, changes in the sound are identified as changes in the data from the original source.

- Crucially, this entire process is mediated by the listener’s background of previous knowledge.

At a given time t, a listener attending to a sonification ƒ(t) will associate the sound they are hearing (the sonic complex sC) with the phenomenon of which they imagine the represented data to be a measurement (the data phenomenon dP). This constitutes the first metaphorical mapping (m1). The second metaphorical mapping (m2) involves the association of changes along dimensions within the sonification (sonic dimension, sD) with changes in the original dataset (measurement dimension, dM). These mappings are further constrained and modulated by the listener’s embodied knowledge, eK. This contains the listener’s understanding of the sound, the data, any instructions or training they have received regarding the sonification and any associations, conscious or unconscious, the listener draws between or to these elements.

An embodied sonic dimension is defined here as any individual sonic aspect that a listener can attend to as a meaningful perceptual unit which remains identifiable while evolving in time along a continuous bi-polar axis. An embodied complex is defined as any perceptual grouping that contains multiple embodied sonic dimensions and can also be identified by a listener as a meaningful perceptual unit.

Research Methods

The project applied empirical research methods and involved many rounds of evaluation, collecting and analysing both quantitative and qualitative data. It involved both user-centric HCI methods (e.g. user evaluations, A/B testing, surveys etc.) and more traditional psychological experiments designed to gauge users’ judgements of stimuli. Some pilot testing was done with small in-person groups, but the majority of the testing was carried out online in order to sample from a large international group of users.

Findings

Overall the project found that designing sonifications on the basis of principles from the field of embodied cognition generally leads to more effective solutions. The project provides support for the Embodied Sonification Listening Model, which reconciles embodied cognition principles with the task of “sonification listening”: listening to a sound in order to extract information about the dataset it represents. For a more detailed description of findings see the finished document.

Research Outputs

This was a multidisciplinary project that resulted in a varied range of outputs across a broad range of media, disciplines and venues.

Journal Articles

- Roddy, S. (2020) Using Conceptual Metaphors to Represent Temporal Context in Time-Series Data Sonification. Interacting with Computers

- Roddy, S., & Furlong, D. (2014). Embodied Aesthetics in Auditory Display. Organised Sound, 19(01), 70-77

Conference Papers

- Roddy, S. & Furlong, D. (2018) Vowel Formant Profiles and Image Schemata in Auditory Display. In Proceedings of HCI 2018 4-6 July 2018, Belfast

- Roddy, S. (2017) Composing The Good Ship Hibernia and the Hole in the Bottom of the World. In Proceedings of Audio Mostly 2017 23-26 August 2017

- Roddy, S., & Furlong, D. (2015). Embodied Auditory Display Affordances. In Proceedings of the 12th Sound and Music Computing Conference: National University of Ireland Maynooth (pp. 477-484)

- Roddy, S., & Furlong, D. (2015). Sonification Listening: An Empirical Embodied Approach. In Proceedings of The 21st International Conference on Auditory Display (ICAD 2015) July 8–10, 2015, Graz, Austria. (pp. 181-187)

- Roddy, S., & Furlong, D. (2013). Embodied cognition in auditory display. In Proceedings of the 19th International Conference on Auditory Display, July 6-10 Łódź, Poland (pp. 127-134)

- Roddy, S., & Furlong, D. (2013). Rethinking the Transmission Medium in Live Computer Music Performance. Presented at the Irish Sound Science and Technology Convocation, Dún Laoghaire Institute of Art, Design and Technology

Musical and Creative Technology Outputs

Data-driven music composition and sound design techniques were used to aid in the design of sonification mapping strategies. These approaches allowed me to find novel and interesting sonic parameters for mapping data. This practice resulted in the production of a number of data-driven sound works in the course of the research project.

These have been gathered into a collection entitled ‘The Human Cost’, named for the socioeconomic data from the 2008 financial crash represented in the pieces, and are available from the usual streaming services online:

| Spotify | Apple Music |

You can learn more about these compositions here: The Human Cost Sonifications

Idle Hands features on the album ‘Tides - An ISSTA Anthology’. It was released on the [Stolen Mirror label](http://stolenmirror.com) and collects many important pieces that were performed during the first 10 years of the Irish Sound Science and Technology Association’s existence. You can learn more about the album and purchase it here: http://stolenmirror.com/2021d01-tides.html

A number of these pieces have been performed live at academic conferences and cultural events at national and international levels including:

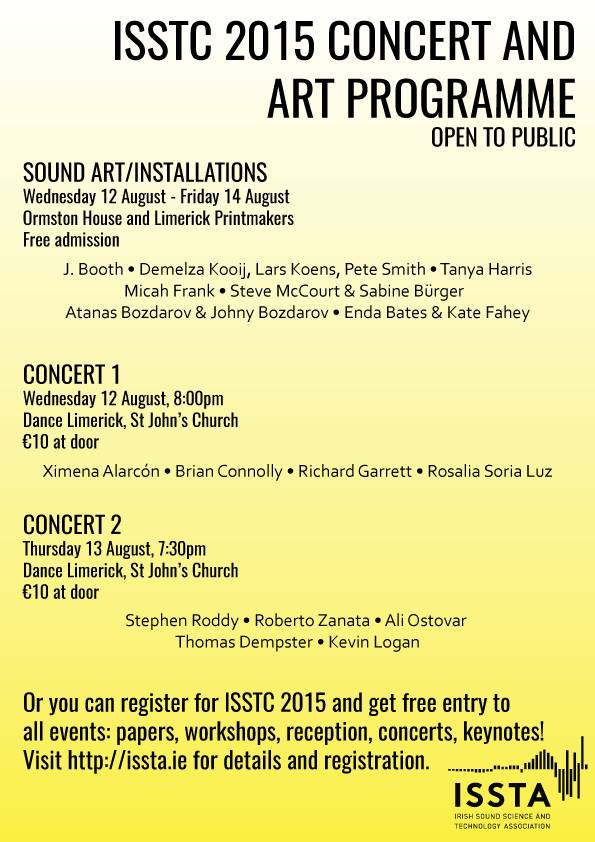

- The Irish Sound Science and Technology Convocation 2014

- The CMC’s 2015 Salon Series in the National Concert Hall Dublin

- The International Conference on Auditory Display Concert 2015

- The Sound and Music Computing Conference 2015

- The Irish Sound Science and Technology Conference 2015

- The International Conference on Auditory Display 2017

- Audio Mostly 2017

Embodied Sonification PhD Thesis

Performances and installations

- The Good Ship Hibernia @ Audio Mostly Queen Mary University London 23-26 August 2017

- The Good Ship Hibernia @ The 2017 ICAD Concert, Playhouse Theatre, Pennsylvania State University, PA, USA 20-23 June 2017

- Doom & Gloom @ Irish Sound Science & Technology Convocation, UL & MIC, Limerick, Ireland August 12-13 2015

- Doom & Gloom @ Sound and Music Computing Concert, NUI Maynooth, Ireland: July 30th - August 1st, 2015

- Doom & Gloom @ International Community for Auditory Display Concert, Forum Stadtpark, Graz, Austria: 8-10 July 2015

- Extensive Structure no 1, The Human Cost, Doom & Gloom @ Contemporary Music Centre Spring Salon Series, National Concert Hall, Kevin Barry Room March 24th 2015

- Idle Hands - A 31-Part Exploration Of Irish Unemployment From 1983 - 2014 In G Major @ Irish Sound Science and Technology Convocation @ NUI Maynooth, Ireland August 2014

- Symmetric Relations and Hidden Rotations @ Irish Sound Science and Technology Convocation @ Ulster University September 7th-9th 2016

- Symmetric Relations and Hidden Rotations @ Sonorities Festival of Contemporary Music @ Belfast November 24-26 2016

Presentations

- Data Listening @ Discover Research Dublin 2015, Trinity College Dublin

- Sonification and the Digital Divide @ Digital Material Conference, National University of Ireland Galway, May 21st 2015

Radio Broadcasts

- The Good Ship Hibernia and the Hole in the Bottom of the World - Glór Mundo St Patricks Day Session 2018 - Glór Mundo - 93.7fm.

- Symmetric Relations and Hidden Rotations - Nova Sunday 13 September 2015 - Nova RTÉ lyric fm.

- No Output: Cellular Storm Nova Sunday 11 October 2015 - Nova RTÉ lyric fm.

- Extensive Structure No 1 - Nova Sunday 8 November 2015 - Nova RTÉ lyric fm.

Press

- Newspaper Article “Offaly man prepares for ‘unique’ musical performance in London”. The Offaly Express, 17 August 2017.

- CMC Salon Series Press Release

- The Offaly Express

- Glór Mundo 29th September 2017

- Glór Mundo 27th September 2017

Creative Skills

HCI Design. Interaction Design. UX Design. Sound Design. Music Composition. Data Sonification. Data Visualisation.

Research & Technical Skills

User Evaluations. A/B Testing. Perceptual Testing. Experimental Design. Distributed User Testing. Programming. Data Analysis. Audio Engineering. Recording. Data Sonification. Data Visualisation.

Tags

Embodied Cognition. Sonification. Auditory Display. Stephen Roddy.
